Preface

Running ffmpeg -i test -pix_fmt rgb24 test.rgb on the command line automatically opens the ff_vf_scale filter. This chapter traces how that happens.

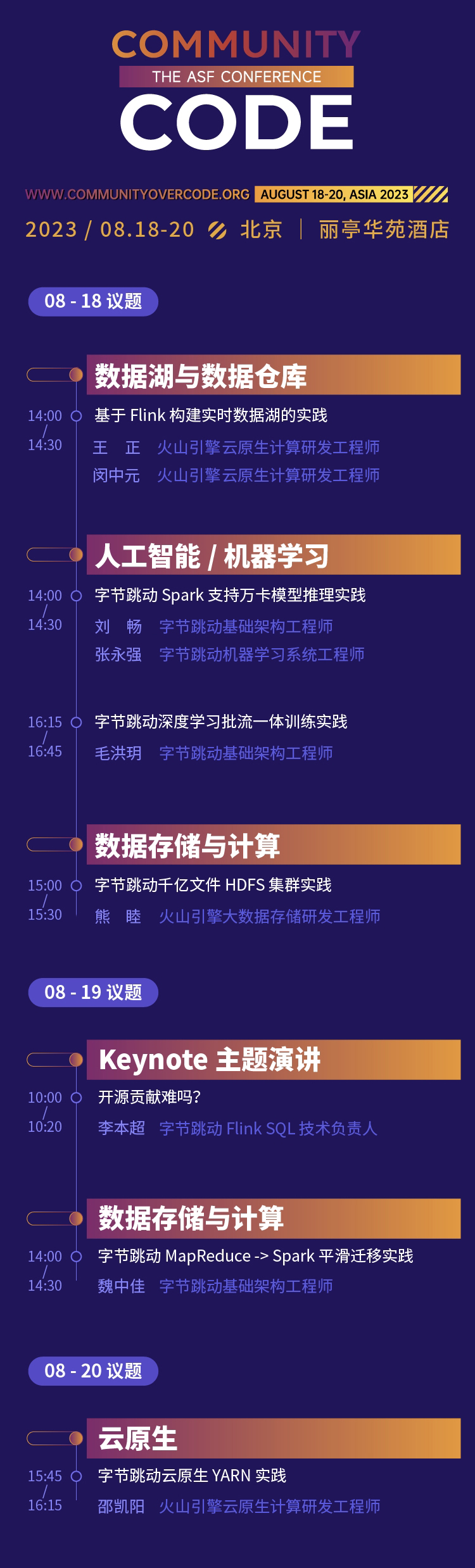

Stepping through with gdb reveals the basic call stack:

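As the call stack shows, the vf_scale filter is created inside query_formats(). This is the filter framework at work: while avfilter runs format negotiation (query formats), if it finds that two adjacent filters disagree on the pixel format, it inserts a vf_scale filter between them. That is the answer to the question in the title.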
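The insertion rule can be illustrated with a toy model. The sketch below is not libavfilter code: `ToyFilter`, `toy_negotiate` and `MAX_NODES` are hypothetical names, and each node is assumed to accept and produce exactly one pixel format, which is far simpler than the real negotiation. It only shows the idea of walking the links and dropping a converter in wherever the two sides disagree.

```c
#include <assert.h>
#include <string.h>

#define MAX_NODES 16

/* Toy filter node (hypothetical): one accepted and one produced pixel format. */
typedef struct ToyFilter {
    const char *name;
    const char *in_fmt;   /* pixel format accepted on the input pad  */
    const char *out_fmt;  /* pixel format produced on the output pad */
} ToyFilter;

/*
 * Walk the chain and insert a "scale" converter on every link whose upstream
 * output format differs from the downstream input format.
 * The array must have room for MAX_NODES entries.
 * Returns the new chain length, or -1 if the chain would overflow.
 */
static int toy_negotiate(ToyFilter *chain, int n)
{
    assert(chain && n >= 1 && n <= MAX_NODES);
    for (int i = 0; i + 1 < n; i++) {
        if (strcmp(chain[i].out_fmt, chain[i + 1].in_fmt) == 0)
            continue;                       /* formats agree on this link */
        if (n == MAX_NODES)
            return -1;
        /* shift the tail right by one slot to make room at index i + 1 */
        memmove(&chain[i + 2], &chain[i + 1],
                (size_t)(n - i - 1) * sizeof(*chain));
        chain[i + 1] = (ToyFilter){ "scale", chain[i].out_fmt,
                                    chain[i + 2].in_fmt };
        n++;
        i++;                                /* skip the converter just added */
    }
    return n;
}
```

Feeding it a chain of buffer (yuv420p), null (yuv420p), format (rgb24) and buffersink (rgb24) yields a five-node chain with scale sitting between null and format.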

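This chapter, however, is mainly about how the ffmpeg tool itself drives avfilter: where the graph is created, where it is destroyed, and how information is passed along in between. In particular, when the command line contains no vf_scale operation, does the ffmpeg tool still open a filter?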

To analyze these questions, we start with a command that is guaranteed to use vf_scale:

    ffmpeg -i test -pix_fmt rgb24 test.rgb

First, locate the two calls through which the tool hands frames to avfilter and pulls them back out:

    ret = av_buffersrc_add_frame_flags(ifilter->filter, frame, buffersrc_flags);

    ret = av_buffersink_get_frame_flags(filter, filtered_frame, AV_BUFFERSINK_FLAG_NO_REQUEST);

ffmpeg.h defines three filter-related structs:

    typedef struct InputFilter {
        AVFilterContext *filter;
        struct InputStream *ist;
        struct FilterGraph *graph;
        uint8_t *name;
        enum AVMediaType type; // AVMEDIA_TYPE_SUBTITLE for sub2video

        AVFifoBuffer *frame_queue;

        // parameters configured for this input
        int format;

        int width, height;
        AVRational sample_aspect_ratio;

        int sample_rate;
        int channels;
        uint64_t channel_layout;

        AVBufferRef *hw_frames_ctx;
        int32_t *displaymatrix;

        int eof;
    } InputFilter;

    typedef struct OutputFilter {
        AVFilterContext *filter;
        struct OutputStream *ost;
        struct FilterGraph *graph;
        uint8_t *name;

        /* temporary storage until stream maps are processed */
        AVFilterInOut *out_tmp;
        enum AVMediaType type;

        /* desired output stream properties */
        int width, height;
        AVRational frame_rate;
        int format;
        int sample_rate;
        uint64_t channel_layout;

        // those are only set if no format is specified and the encoder gives us multiple options
        // They point directly to the relevant lists of the encoder.
        const int *formats;
        const uint64_t *channel_layouts;
        const int *sample_rates;
    } OutputFilter;

    typedef struct FilterGraph {
        int index;
        const char *graph_desc;

        AVFilterGraph *graph;
        int reconfiguration;
        // true when the filtergraph contains only meta filters
        // that do not modify the frame data
        int is_meta;

        InputFilter **inputs;
        int nb_inputs;
        OutputFilter **outputs;
        int nb_outputs;
    } FilterGraph;
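The ownership pattern in these structs (a graph holds arrays of inputs and outputs, and each of them points back at its graph) can be sketched with cut-down stand-ins. Everything here (`ToyGraph`, `ToyInput`, `toy_graph_add_input`, `toy_graph_free`) is hypothetical and only models the input side; starting the format at -1 is an assumption of this sketch meaning "unknown until the first frame arrives".

```c
#include <assert.h>
#include <stdlib.h>

struct ToyGraph;

/* Cut-down stand-in for InputFilter (hypothetical). */
typedef struct ToyInput {
    struct ToyGraph *graph;   /* back-pointer to the owning graph */
    int format, width, height;
} ToyInput;

/* Cut-down stand-in for FilterGraph (hypothetical). */
typedef struct ToyGraph {
    ToyInput **inputs;
    int nb_inputs;
} ToyGraph;

/* Append a new input to the graph and set its back-pointer. NULL on OOM. */
static ToyInput *toy_graph_add_input(ToyGraph *g)
{
    assert(g);
    ToyInput *in = calloc(1, sizeof(*in));
    if (!in)
        return NULL;
    ToyInput **grown = realloc(g->inputs,
                               (size_t)(g->nb_inputs + 1) * sizeof(*grown));
    if (!grown) {
        free(in);
        return NULL;
    }
    g->inputs = grown;
    g->inputs[g->nb_inputs++] = in;
    in->graph  = g;
    in->format = -1;   /* assumption: unknown until a frame is seen */
    return in;
}

/* Release every input and the array itself. */
static void toy_graph_free(ToyGraph *g)
{
    assert(g);
    for (int i = 0; i < g->nb_inputs; i++)
        free(g->inputs[i]);
    free(g->inputs);
    g->inputs = NULL;
    g->nb_inputs = 0;
}
```

The back-pointer is what lets a function that only receives an input reach the graph it belongs to, which is exactly how the frame-sending code later in this chapter starts (`ifilter->graph`).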

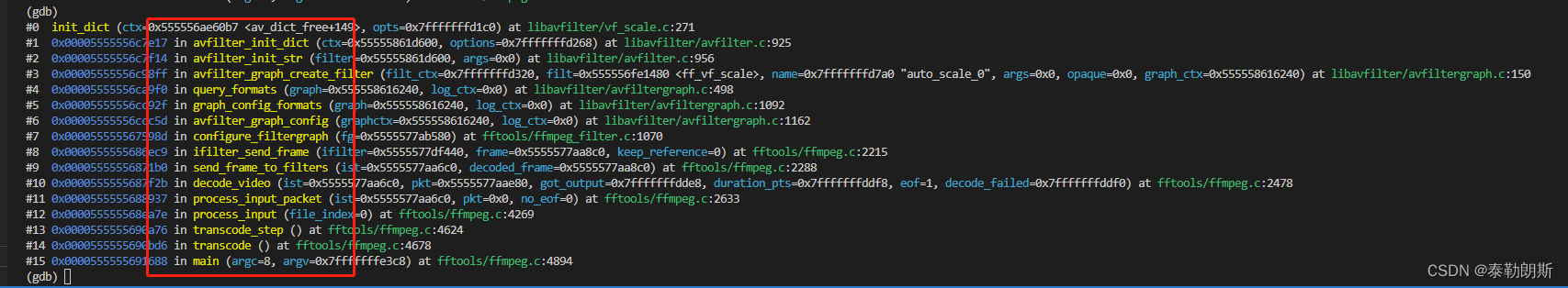

With these clues in hand, we look for where buffersrc and buffersink are created.

Start with buffersrc, which is reached through the following call chain:

    decode_video(InputStream *ist, AVPacket *pkt, int *got_output, int64_t *duration_pts, int eof, int *decode_failed)
      decode(ist->dec_ctx, decoded_frame, got_output, pkt)
      send_frame_to_filters(ist, decoded_frame)
        ifilter_send_frame(ist->filters[i], decoded_frame, i < ist->nb_filters - 1)
          configure_filtergraph(fg) // reinit
          av_buffersrc_add_frame_flags(ifilter->filter, frame, buffersrc_flags)

The chain shows that configure_filtergraph(fg) // reinit is the key step: this is where the whole avfilter graph is initialized.

Before going on, let us recall the typical sequence of avfilter calls:

    char args[512];
    int ret = 0;
    const AVFilter *buffersrc  = avfilter_get_by_name("buffer");
    const AVFilter *buffersink = avfilter_get_by_name("buffersink");
    AVFilterInOut *outputs = avfilter_inout_alloc();
    AVFilterInOut *inputs  = avfilter_inout_alloc();
    AVRational time_base = fmt_ctx->streams[video_stream_index]->time_base;
    enum AVPixelFormat pix_fmts[] = { AV_PIX_FMT_GRAY8, AV_PIX_FMT_NONE };

    filter_graph = avfilter_graph_alloc();
    if (!outputs || !inputs || !filter_graph) {
        ret = AVERROR(ENOMEM);
        goto end;
    }

    /* buffer video source: the decoded frames from the decoder will be inserted here. */
    snprintf(args, sizeof(args),
             "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
             dec_ctx->width, dec_ctx->height, dec_ctx->pix_fmt,
             time_base.num, time_base.den,
             dec_ctx->sample_aspect_ratio.num, dec_ctx->sample_aspect_ratio.den);

    ret = avfilter_graph_create_filter(&buffersrc_ctx, buffersrc, "in",
                                       args, NULL, filter_graph);
    if (ret < 0) {
        av_log(NULL, AV_LOG_ERROR, "Cannot create buffer source\n");
        goto end;
    }

    /* buffer video sink: to terminate the filter chain. */
    ret = avfilter_graph_create_filter(&buffersink_ctx, buffersink, "out",
                                       NULL, NULL, filter_graph);
    if (ret < 0) {
        av_log(NULL, AV_LOG_ERROR, "Cannot create buffer sink\n");
        goto end;
    }

    ret = av_opt_set_int_list(buffersink_ctx, "pix_fmts", pix_fmts,
                              AV_PIX_FMT_NONE, AV_OPT_SEARCH_CHILDREN);
    if (ret < 0) {
        av_log(NULL, AV_LOG_ERROR, "Cannot set output pixel format\n");
        goto end;
    }

    outputs->name       = av_strdup("in");
    outputs->filter_ctx = buffersrc_ctx;
    outputs->pad_idx    = 0;
    outputs->next       = NULL;

    /*
     * The buffer sink input must be connected to the output pad of
     * the last filter described by filters_descr; since the last
     * filter output label is not specified, it is set to "out" by
     * default.
     */
    inputs->name       = av_strdup("out");
    inputs->filter_ctx = buffersink_ctx;
    inputs->pad_idx    = 0;
    inputs->next       = NULL;

    if ((ret = avfilter_graph_parse_ptr(filter_graph, filters_descr,
                                        &inputs, &outputs, NULL)) < 0)
        goto end;

    if ((ret = avfilter_graph_config(filter_graph, NULL)) < 0)
        goto end;
    ...

Now back to ifilter_send_frame():

    static int ifilter_send_frame(InputFilter *ifilter, AVFrame *frame, int keep_reference)
    {
        FilterGraph *fg = ifilter->graph;
        AVFrameSideData *sd;
        int need_reinit, ret;
        int buffersrc_flags = AV_BUFFERSRC_FLAG_PUSH;

        if (keep_reference)
            buffersrc_flags |= AV_BUFFERSRC_FLAG_KEEP_REF;

        /* determine if the parameters for this input changed */
        // a format mismatch between this input and the frame triggers a reinit
        need_reinit = ifilter->format != frame->format;

        switch (ifilter->ist->st->codecpar->codec_type) {
        case AVMEDIA_TYPE_VIDEO:
            need_reinit |= ifilter->width  != frame->width ||
                           ifilter->height != frame->height;
            break;
        }

        if (!ifilter->ist->reinit_filters && fg->graph)
            need_reinit = 0;

        if (!!ifilter->hw_frames_ctx != !!frame->hw_frames_ctx ||
            (ifilter->hw_frames_ctx && ifilter->hw_frames_ctx->data != frame->hw_frames_ctx->data))
            need_reinit = 1;

        if (sd = av_frame_get_side_data(frame, AV_FRAME_DATA_DISPLAYMATRIX)) {
            if (!ifilter->displaymatrix || memcmp(sd->data, ifilter->displaymatrix, sizeof(int32_t) * 9))
                need_reinit = 1;
        } else if (ifilter->displaymatrix)
            need_reinit = 1;

        if (need_reinit) {
            // the ifilter picks up width, height, pixel format, etc. from the frame here
            ret = ifilter_parameters_from_frame(ifilter, frame);
            if (ret < 0)
                return ret;
        }

        /* (re)init the graph if possible, otherwise buffer the frame and return */
        if (need_reinit || !fg->graph) {
            ret = configure_filtergraph(fg);
            if (ret < 0) {
                av_log(NULL, AV_LOG_ERROR, "Error reinitializing filters!\n");
                return ret;
            }
        }

        ret = av_buffersrc_add_frame_flags(ifilter->filter, frame, buffersrc_flags);
        if (ret < 0) {
            if (ret != AVERROR_EOF)
                av_log(NULL, AV_LOG_ERROR, "Error while filtering: %s\n", av_err2str(ret));
            return ret;
        }

        return 0;
    }
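The decision logic in this function is easier to see in isolation. The following is a minimal sketch, not the ffmpeg code: `ToyParams`, `toy_need_reinit` and `toy_must_configure` are hypothetical names, and the hardware-frames and display-matrix checks from the excerpt are deliberately left out.

```c
#include <assert.h>
#include <stdbool.h>

/* The per-input parameters compared against each incoming frame (toy). */
typedef struct ToyParams {
    int format, width, height;
} ToyParams;

/* True when the frame's parameters differ from what the input was set up for. */
static bool toy_need_reinit(const ToyParams *configured, const ToyParams *frame,
                            bool reinit_filters, bool graph_exists)
{
    assert(configured && frame);
    bool changed = configured->format != frame->format ||
                   configured->width  != frame->width  ||
                   configured->height != frame->height;

    /* With reinit disabled, an existing graph is kept even if parameters changed. */
    if (!reinit_filters && graph_exists)
        changed = false;
    return changed;
}

/* The graph is (re)configured when parameters changed or no graph exists yet. */
static bool toy_must_configure(const ToyParams *configured, const ToyParams *frame,
                               bool reinit_filters, bool graph_exists)
{
    return toy_need_reinit(configured, frame, reinit_filters, graph_exists) ||
           !graph_exists;
}
```

In this model the very first frame always triggers configuration because no graph exists yet, which matches the observation that the graph is built lazily on the decode path rather than at startup.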

Next comes configure_filtergraph(fg), with the code unrelated to our question removed:

    int configure_filtergraph(FilterGraph *fg)
    {
        AVFilterInOut *inputs, *outputs, *cur;
        // check whether the graph is "simple"; our command line supplies no
        // complex filtergraph, so this is true
        int ret, i, simple = filtergraph_is_simple(fg);
        // int filtergraph_is_simple(FilterGraph *fg)
        // { return !fg->graph_desc; }

        // with no -vf given this is not a NULL pointer: it appears to default to
        // the string "null", the pass-through filter that shows up as ff_vf_null
        // in the final chain
        const char *graph_desc = simple ? fg->outputs[0]->ost->avfilter : fg->graph_desc;

        cleanup_filtergraph(fg);
        // create the graph
        if (!(fg->graph = avfilter_graph_alloc()))
            return AVERROR(ENOMEM);

        if (simple) {
            // fetch the output stream
            OutputStream *ost = fg->outputs[0]->ost;
            char args[512];
            const AVDictionaryEntry *e = NULL;
        } else {
            fg->graph->nb_threads = filter_complex_nbthreads;
        }

        // in our case graph_desc is "null", so parsing only creates the
        // pass-through null filter
        if ((ret = avfilter_graph_parse2(fg->graph, graph_desc, &inputs, &outputs)) < 0)
            goto fail;

        // this is where buffersrc is configured
        for (cur = inputs, i = 0; cur; cur = cur->next, i++) {
            if ((ret = configure_input_filter(fg, fg->inputs[i], cur)) < 0) {
                avfilter_inout_free(&inputs);
                avfilter_inout_free(&outputs);
                goto fail;
            }
        }
        avfilter_inout_free(&inputs);

        // this is where buffersink is configured
        for (cur = outputs, i = 0; cur; cur = cur->next, i++)
            configure_output_filter(fg, fg->outputs[i], cur);
        avfilter_inout_free(&outputs);

        if (!auto_conversion_filters)
            avfilter_graph_set_auto_convert(fg->graph, AVFILTER_AUTO_CONVERT_NONE);

        // the standard avfilter configuration call
        if ((ret = avfilter_graph_config(fg->graph, NULL)) < 0)
            goto fail;

        fg->is_meta = graph_is_meta(fg->graph);

        return 0;

    fail:
        // error path: tear the partially built graph down again
        cleanup_filtergraph(fg);
        return ret;
    }
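How the graph description string is picked can be sketched as a small pure function. This is a toy under stated assumptions, not the ffmpeg implementation: `toy_choose_graph_desc` is a hypothetical name, and the fallback to a pass-through filter named null (anull for audio) when no per-stream filter is given is assumed here because it is consistent with the ff_vf_null entry that appears in the final filter chain.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/*
 * Pick the description string that will be parsed into a graph.
 *   complex_desc   - description of a complex graph, or NULL for a simple graph
 *   stream_filters - per-stream filter string of a simple graph, or NULL
 *   is_video       - selects the video or audio pass-through default
 */
static const char *toy_choose_graph_desc(const char *complex_desc,
                                         const char *stream_filters,
                                         int is_video)
{
    if (complex_desc)       /* complex graph: use its description verbatim */
        return complex_desc;
    if (stream_filters)     /* simple graph with explicit per-stream filters */
        return stream_filters;
    /* assumed default: a pass-through filter, so the graph is never empty */
    return is_video ? "null" : "anull";
}
```

With a non-empty default, the parsing step always has at least one filter to create, and the source and sink can then be attached to its open input and output pads.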

Next, let us see how the input filter is configured. For a video stream the work is done in configure_input_video_filter():

    static int configure_input_video_filter(FilterGraph *fg, InputFilter *ifilter,
                                            AVFilterInOut *in)
    {
        AVFilterContext *last_filter;
        // look up the "buffer" source filter
        const AVFilter *buffer_filt = avfilter_get_by_name("buffer");
        const AVPixFmtDescriptor *desc;
        InputStream *ist = ifilter->ist;
        InputFile     *f = input_files[ist->file_index];
        AVRational tb = ist->framerate.num ? av_inv_q(ist->framerate) :
                                             ist->st->time_base;
        AVRational fr = ist->framerate;
        AVRational sar;
        AVBPrint args;
        char name[255];
        int ret, pad_idx = 0;
        int64_t tsoffset = 0;
        AVBufferSrcParameters *par = av_buffersrc_parameters_alloc();

        if (!par)
            return AVERROR(ENOMEM);
        memset(par, 0, sizeof(*par));
        par->format = AV_PIX_FMT_NONE;

        if (!fr.num)
            fr = av_guess_frame_rate(input_files[ist->file_index]->ctx, ist->st, NULL);

        sar = ifilter->sample_aspect_ratio;
        if (!sar.den)
            sar = (AVRational){0,1};
        av_bprint_init(&args, 0, AV_BPRINT_SIZE_AUTOMATIC);
        // build the argument string that configures buffersrc
        av_bprintf(&args,
                   "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:"
                   "pixel_aspect=%d/%d",
                   ifilter->width, ifilter->height, ifilter->format,
                   tb.num, tb.den, sar.num, sar.den);
        if (fr.num && fr.den)
            av_bprintf(&args, ":frame_rate=%d/%d", fr.num, fr.den);
        snprintf(name, sizeof(name), "graph %d input from stream %d:%d", fg->index,
                 ist->file_index, ist->st->index);

        // create the filter context
        if ((ret = avfilter_graph_create_filter(&ifilter->filter, buffer_filt, name,
                                                args.str, NULL, fg->graph)) < 0)
            goto fail;
        par->hw_frames_ctx = ifilter->hw_frames_ctx;
        ret = av_buffersrc_parameters_set(ifilter->filter, par);
        if (ret < 0)
            goto fail;
        av_freep(&par);
        last_filter = ifilter->filter;

        desc = av_pix_fmt_desc_get(ifilter->format);
        av_assert0(desc);

        snprintf(name, sizeof(name), "trim_in_%d_%d", ist->file_index, ist->st->index);
        if (copy_ts) {
            tsoffset = f->start_time == AV_NOPTS_VALUE ? 0 : f->start_time;
            if (!start_at_zero && f->ctx->start_time != AV_NOPTS_VALUE)
                tsoffset += f->ctx->start_time;
        }
        // insert trim
        ret = insert_trim(((f->start_time == AV_NOPTS_VALUE) || !f->accurate_seek) ?
                          AV_NOPTS_VALUE : tsoffset, f->recording_time,
                          &last_filter, &pad_idx, name);
        if (ret < 0)
            return ret;

        // link the end of the input chain to the parsed graph
        if ((ret = avfilter_link(last_filter, 0, in->filter_ctx, in->pad_idx)) < 0)
            return ret;
        return 0;

    fail:
        av_freep(&par);
        return ret;
    }
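The argument string handed to the buffer source is the part of this function that most directly carries the frame parameters into the graph. Below is a self-contained sketch that reproduces only the formatting step with plain snprintf; `ToyRational` and `toy_buffersrc_args` are hypothetical stand-ins (the real code uses AVRational and AVBPrint), and the numeric pixel format value used in any call is just an example integer.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Minimal stand-in for a rational number (hypothetical). */
typedef struct ToyRational {
    int num, den;
} ToyRational;

/*
 * Format "video_size=WxH:pix_fmt=N:time_base=a/b:pixel_aspect=c/d" and, when
 * the frame rate is known, append ":frame_rate=e/f".
 * Returns the string length, or -1 if the buffer is too small.
 */
static int toy_buffersrc_args(char *buf, size_t size, int w, int h, int pix_fmt,
                              ToyRational tb, ToyRational sar, ToyRational fr)
{
    assert(buf && size > 0);
    if (!sar.den)                       /* unknown aspect ratio becomes 0/1 */
        sar = (ToyRational){ 0, 1 };

    int n = snprintf(buf, size,
                     "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:"
                     "pixel_aspect=%d/%d",
                     w, h, pix_fmt, tb.num, tb.den, sar.num, sar.den);
    if (n < 0 || (size_t)n >= size)
        return -1;

    if (fr.num && fr.den) {             /* frame rate is optional */
        int m = snprintf(buf + n, size - (size_t)n, ":frame_rate=%d/%d",
                         fr.num, fr.den);
        if (m < 0 || (size_t)m >= size - (size_t)n)
            return -1;
        n += m;
    }
    return n;
}
```

Note that the pixel format travels as a plain integer in this string, so the source filter starts out with exactly the format the decoder produced; any conversion has to happen further down the chain.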

The output side follows. For a video stream, configure_output_filter ends up in configure_output_video_filter():

    static int configure_output_video_filter(FilterGraph *fg, OutputFilter *ofilter, AVFilterInOut *out)
    {
        OutputStream *ost = ofilter->ost;
        OutputFile    *of = output_files[ost->file_index];
        AVFilterContext *last_filter = out->filter_ctx;
        AVBPrint bprint;
        int pad_idx = out->pad_idx;
        int ret;
        const char *pix_fmts;
        char name[255];

        snprintf(name, sizeof(name), "out_%d_%d", ost->file_index, ost->index);
        // create buffersink
        ret = avfilter_graph_create_filter(&ofilter->filter,
                                           avfilter_get_by_name("buffersink"),
                                           name, NULL, NULL, fg->graph);
        if (ret < 0)
            return ret;

        // this scale is purely a size resize
        if ((ofilter->width || ofilter->height) && ofilter->ost->autoscale) {
            char args[255];
            AVFilterContext *filter;
            const AVDictionaryEntry *e = NULL;

            // only the size is scaled here; there is no color space conversion
            snprintf(args, sizeof(args), "%d:%d", ofilter->width, ofilter->height);

            while ((e = av_dict_get(ost->sws_dict, "", e, AV_DICT_IGNORE_SUFFIX))) {
                av_strlcatf(args, sizeof(args), ":%s=%s", e->key, e->value);
            }

            snprintf(name, sizeof(name), "scaler_out_%d_%d", ost->file_index, ost->index);
            if ((ret = avfilter_graph_create_filter(&filter, avfilter_get_by_name("scale"),
                                                    name, args, NULL, fg->graph)) < 0)
                return ret;
            if ((ret = avfilter_link(last_filter, pad_idx, filter, 0)) < 0)
                return ret;

            last_filter = filter;
            pad_idx = 0;
        }

        av_bprint_init(&bprint, 0, AV_BPRINT_SIZE_UNLIMITED);
        // if an output pix_fmt is set, a format filter is added here;
        // the format filter does not touch the frame data: it only pins the pixel
        // format at this point so that format negotiation can decide whether a
        // color-space-converting swscale (scale) filter must be inserted
        if ((pix_fmts = choose_pix_fmts(ofilter, &bprint))) {
            AVFilterContext *filter;

            ret = avfilter_graph_create_filter(&filter,
                                               avfilter_get_by_name("format"),
                                               "format", pix_fmts, NULL, fg->graph);
            av_bprint_finalize(&bprint, NULL);
            if (ret < 0)
                return ret;
            if ((ret = avfilter_link(last_filter, pad_idx, filter, 0)) < 0)
                return ret;

            last_filter = filter;
            pad_idx = 0;
        }

        if (ost->frame_rate.num && 0) {
            AVFilterContext *fps;
            char args[255];

            snprintf(args, sizeof(args), "fps=%d/%d", ost->frame_rate.num,
                     ost->frame_rate.den);
            snprintf(name, sizeof(name), "fps_out_%d_%d",
                     ost->file_index, ost->index);
            ret = avfilter_graph_create_filter(&fps, avfilter_get_by_name("fps"),
                                               name, args, NULL, fg->graph);
            if (ret < 0)
                return ret;

            ret = avfilter_link(last_filter, pad_idx, fps, 0);
            if (ret < 0)
                return ret;
            last_filter = fps;
            pad_idx = 0;
        }

        snprintf(name, sizeof(name), "trim_out_%d_%d",
                 ost->file_index, ost->index);
        ret = insert_trim(of->start_time, of->recording_time,
                          &last_filter, &pad_idx, name);
        if (ret < 0)
            return ret;

        if ((ret = avfilter_link(last_filter, pad_idx, ofilter->filter, 0)) < 0)
            return ret;

        return 0;
    }
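The resize branch builds its scale arguments as "width:height" followed by one ":key=value" pair per scaler option. A minimal sketch of just that string assembly is shown here; `ToyOpt` and `toy_scale_args` are hypothetical names, a plain array stands in for the option dictionary, and the option used in any call is only an example.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* One key/value scaler option (stand-in for a dictionary entry). */
typedef struct ToyOpt {
    const char *key;
    const char *value;
} ToyOpt;

/*
 * Build "W:H" and append ":key=value" for each option.
 * Returns the string length, or -1 if the buffer is too small.
 */
static int toy_scale_args(char *buf, size_t size, int w, int h,
                          const ToyOpt *opts, int nb_opts)
{
    assert(buf && size > 0 && nb_opts >= 0);
    int n = snprintf(buf, size, "%d:%d", w, h);
    if (n < 0 || (size_t)n >= size)
        return -1;

    for (int i = 0; i < nb_opts; i++) {
        int m = snprintf(buf + n, size - (size_t)n, ":%s=%s",
                         opts[i].key, opts[i].value);
        if (m < 0 || (size_t)m >= size - (size_t)n)
            return -1;
        n += m;
    }
    return n;
}
```

Nothing in this string mentions a pixel format, which is the point made in the code comments: this explicitly created scale instance only resizes, and any pixel format conversion comes from a separate, automatically inserted one.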

So the graph that the ffmpeg tool builds consists, in order, of:

ff_vsrc_buffer->ff_vf_null->(scale resize)->ff_vf_format (when the command line sets a format parameter)->(fps)->(trim)->ff_vsink_buffer.

The filters in parentheses are optional.
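The order in which the optional filters are appended can be summarized in a small sketch. It is a toy description builder, not ffmpeg code: `ToyChainOpts`, `toy_append` and `toy_describe_chain` are hypothetical names, the filters are represented only by their names, and the automatically inserted pixel format converter is not modeled. In the excerpt above the fps branch is guarded by `&& 0`, so `has_fps` would always be 0 for that code path.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Which optional filters are present (toy flags). */
typedef struct ToyChainOpts {
    int has_resize;  /* an output size was requested   */
    int has_format;  /* an output pixel format was set */
    int has_fps;     /* an fps filter is wanted        */
    int has_trim;    /* a start time or duration limit */
} ToyChainOpts;

/* Append one filter name to buf, separated by "->". Returns -1 on overflow. */
static int toy_append(char *buf, size_t size, const char *name)
{
    size_t len = strlen(buf);
    int n = snprintf(buf + len, size - len, "%s%s", len ? "->" : "", name);
    return (n < 0 || (size_t)n >= size - len) ? -1 : 0;
}

/* Describe the chain from source to sink in the order the filters are added. */
static int toy_describe_chain(char *buf, size_t size, const ToyChainOpts *o)
{
    assert(buf && size > 0 && o);
    buf[0] = '\0';
    if (toy_append(buf, size, "buffer") < 0 || toy_append(buf, size, "null") < 0)
        return -1;
    if (o->has_resize && toy_append(buf, size, "scale") < 0)
        return -1;
    if (o->has_format && toy_append(buf, size, "format") < 0)
        return -1;
    if (o->has_fps && toy_append(buf, size, "fps") < 0)
        return -1;
    if (o->has_trim && toy_append(buf, size, "trim") < 0)
        return -1;
    return toy_append(buf, size, "buffersink");
}
```

For the command studied in this chapter only `has_format` would be set, giving buffer->null->format->buffersink before negotiation adds the converter.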

Format negotiation ends by settling the pixel format on the ff_vsrc_buffer side.

Finally, a rawenc encoder turns each frame into a pkt…